Timnit Gebru, a co-founder of Black in AI, left Google in December 2020, and her departure provoked outrage within the computer science community.

The prominent artificial intelligence (AI) researcher said that the company fired her after she declined to remove her name from a paper on the carbon footprint of large language models. The paper also questioned whether this technology could harm marginalized groups. Two of Gebru’s colleagues quickly quit over her treatment, a third resigned last month citing the ongoing controversy, and many others have signed letters of protest. The now-notorious paper has drawn new attention to AI’s unintended social and environmental impacts.

Gebru and her colleagues are not the first to address this topic. “Green AI” research – AI research that’s more environmentally friendly and inclusive – explores AI’s carbon footprint and ways to reduce it. Green AI researchers see a trend in machine learning (ML) toward programs that demand ever more computing power and favour accuracy over efficiency, resulting in large experiments that are run many times without attention to their carbon footprint. This is not only bad for the environment; it also makes ML research prohibitively expensive for under-resourced researchers. While green AI addresses many applications of ML, Gebru and her colleagues focused on natural language processing (NLP) models, which improve machine interactions with human languages. Training one of the NLP tools that power Google Search can produce roughly as much carbon dioxide as a trans-American flight.

Those of us who aren’t computer scientists may find it difficult to reconcile this carbon-footprint revelation with claims about the carbon-saving potential of AI in smart grids, emissions monitoring or precision forestry. The short answer is that AI is neither “all good” nor “all bad” for the environment. A few simple lessons lie at the heart of this issue: methods matter; hardware, cloud providers and regional energy sources matter more; and – as Gebru and her colleagues point out – it’s worth weighing the social and environmental impacts of digital activity against its benefits.

Methods matter

There are many ways for a machine to learn something new. A machine designed to generate English text could be given the rules of grammar, or it could self-educate from a data set of English writing, finding patterns and applying what it has learned. The self-educating machine could train on a limited data set, such as movie scripts, or researchers could have it draw on the entire English-language internet. If the machine was carefully designed and debugged, training might be finished in a single run; if not, the researcher might need to repeat the work, at a significant carbon cost. After the machine has processed its data set, another researcher could use it as is or retrain it with a new data set or modified instructions.

Unsurprisingly, the amount of energy it takes to train a machine depends significantly on all these choices, as well as on hardware. As with other notable digital carbon footprints, such as Bitcoin mining, green AI research tells us to consider what we’re trying to achieve and whether the same goal could be reached more efficiently.
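To make that dependence concrete, here is a minimal back-of-the-envelope sketch in Python. It is not a method from the article: the `TrainingJob` class, the `training_energy_kwh` function and every number in it are hypothetical. It assumes energy scales simply with device power, training time, the number of repeated runs and a data-centre overhead factor called power usage effectiveness (PUE, the ratio of a facility's total energy use to the energy used by its computing equipment).

```python
from dataclasses import dataclass


@dataclass
class TrainingJob:
    """Hypothetical description of a training experiment (illustrative numbers only)."""
    num_devices: int          # GPUs or other accelerators used in parallel
    watts_per_device: float   # assumed average power draw of each device, in watts
    hours_per_run: float      # wall-clock time of one training run
    runs: int = 1             # repeats for debugging, tuning or failed attempts
    pue: float = 1.5          # assumed power usage effectiveness of the data centre


def training_energy_kwh(job: TrainingJob) -> float:
    """Rough facility-level energy for all runs of a job, in kilowatt-hours."""
    device_kw = job.num_devices * job.watts_per_device / 1000  # watts -> kilowatts
    per_run_kwh = device_kw * job.hours_per_run * job.pue      # add cooling/overhead via PUE
    return per_run_kwh * job.runs                              # every repeat costs the same again


# Three hypothetical versions of the same experiment.
careful = TrainingJob(num_devices=8, watts_per_device=300, hours_per_run=24)
repeated = TrainingJob(num_devices=8, watts_per_device=300, hours_per_run=24, runs=10)
# Assumes, for illustration, that a quarter of the data takes a quarter of the time.
smaller_data = TrainingJob(num_devices=8, watts_per_device=300, hours_per_run=6)

for name, job in [("careful", careful), ("repeated", repeated), ("smaller_data", smaller_data)]:
    print(f"{name}: {training_energy_kwh(job):.1f} kWh")
# careful: 86.4 kWh
# repeated: 864.0 kWh
# smaller_data: 21.6 kWh
```

Because the factors multiply, repeating a poorly debugged experiment ten times multiplies its energy use tenfold, while a more modest data set shrinks it.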

Operational decisions matter more

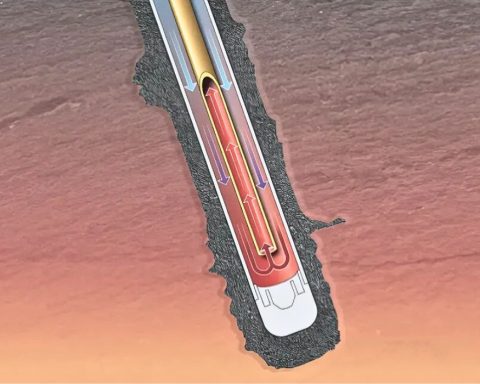

Some programs, like many used to power Google Search, are so large that they can’t be run quickly on a personal computer, so NLP researchers often outsource them to big cloud computing centres. Amazon Web Services (AWS), Microsoft Azure and Google Cloud are the three largest cloud infrastructure providers worldwide, followed by Alibaba and IBM.

A cloud computing centre’s efficiency and location significantly affect its emissions. North America alone shows a huge range: a server in Quebec (where the grid is dominated by low-carbon hydroelectricity) is associated with about 20 grams of carbon dioxide equivalent per kilowatt-hour, while one in Iowa (where, after wind energy, coal is the most common electricity source) is associated with almost 737 grams, more than 35 times as much. Electricity sources are the biggest driver of these differences: low-carbon energy infrastructure can significantly reduce the environmental cost of electricity in a region. Accordingly, where major cloud providers build their data centres has a big impact on their climate-friendliness.
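The arithmetic behind that comparison is simple: operational emissions are the energy a job consumes multiplied by the carbon intensity of the electricity supplying it. The minimal Python sketch below uses the article's figures of 20 and 737 grams of CO2 equivalent per kilowatt-hour; the 1,000 kWh job size and the `emissions_kg` helper are hypothetical, and the sketch ignores emissions from manufacturing the hardware.

```python
# Grid carbon intensities quoted in the article, in grams of CO2 equivalent per kWh.
GRID_INTENSITY_G_PER_KWH = {
    "Quebec": 20.0,
    "Iowa": 737.0,
}


def emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions in kg CO2e: energy used times grid carbon intensity."""
    return energy_kwh * intensity_g_per_kwh / 1000  # grams -> kilograms


job_kwh = 1000.0  # hypothetical energy use of one training job

for region, intensity in GRID_INTENSITY_G_PER_KWH.items():
    print(f"{region}: {emissions_kg(job_kwh, intensity):.0f} kg CO2e")
# Quebec: 20 kg CO2e
# Iowa: 737 kg CO2e

ratio = GRID_INTENSITY_G_PER_KWH["Iowa"] / GRID_INTENSITY_G_PER_KWH["Quebec"]
print(f"Same job in Iowa: {ratio:.2f} times the Quebec emissions")
# Same job in Iowa: 36.85 times the Quebec emissions
```

Holding energy use fixed isolates the effect of the grid: the job is identical, and only the electricity source changes.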

Technology users might lack control over the type of energy in their region, but they can select their cloud provider carefully. In 2017, among the top three cloud providers, Google was estimated to have the highest share of renewable energy (56%), followed by Microsoft (32%) and Amazon Web Services (17%). Each of these companies has set carbon-neutrality targets, though all still operate data centres that rely on fossil fuels and purchase varying quantities of renewable energy credits (RECs) to offset them.

Social impact endgame matters

It’s notoriously difficult to foresee the social impact of new technology. Like the recruiting algorithm that learned to downgrade women’s resumés because of biased training data, AI can reinforce and worsen human inequality under the guise of scientific objectivity. Alternatively, it can help us identify and address those biases. Gebru and her colleagues discuss language models that have learned racism, and the problem of focusing only on dominant, well-resourced languages. They note that the harms of climate change are likely to reach speakers of Dhivehi (the official language of the Maldives) or Sudanese Arabic long before they reach speakers of English, yet the largest language models are built mainly for dominant languages like English.

Researchers in other fields that affect humans, such as the social and medical sciences, have long had to weigh social benefits against harms in their research design. Some AI conferences and publications have begun to require social impact analyses, but green AI research asks further questions: What are the environmental impacts of the experiments being run? Do the experiments improve the lives of those whom climate change will hurt the most?

Finally, how can consumers make informed choices about which digital tools and infrastructure will minimize their carbon footprint online? While digital carbon footprints are becoming better understood, we need broader public education and open-access data from companies that develop and provide AI-powered tools and cloud infrastructure. That way, we can all make informed decisions about the social and environmental impacts of the technologies we use daily.

By Faun Rice, senior research and policy analyst at the Information and Communications Technology Council (ICTC). Figures by Akshay Kotak, senior economist at ICTC.